Generative Visual (4)

Abstract

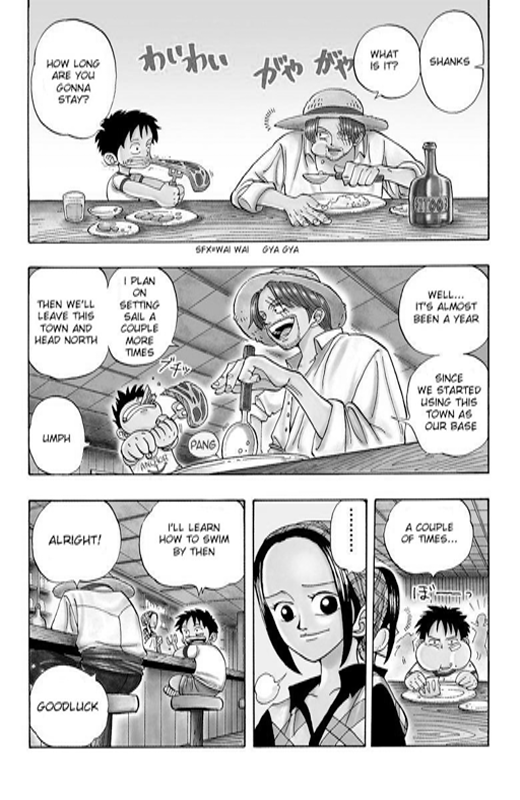

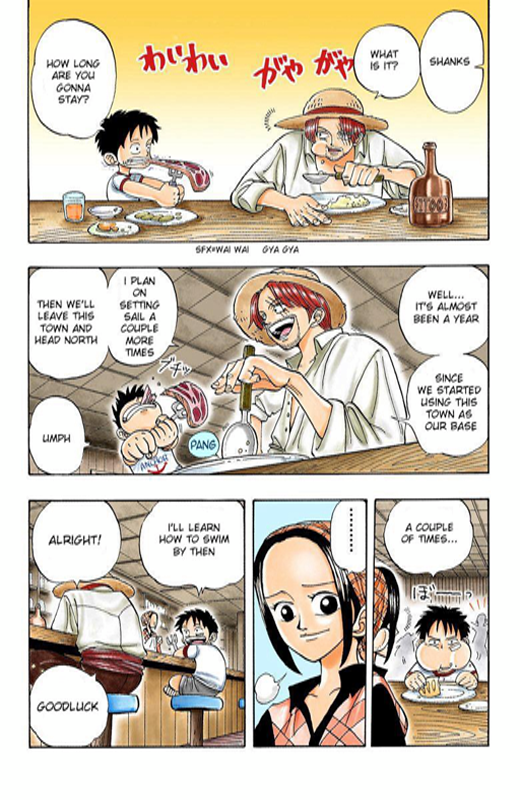

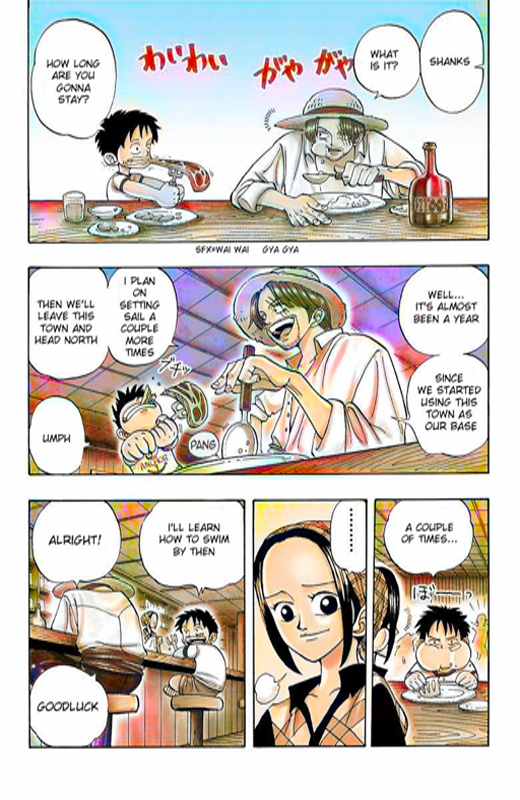

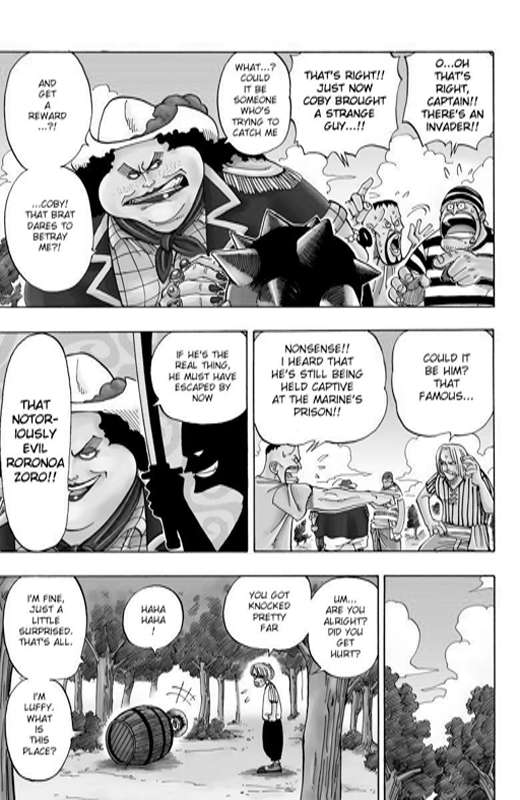

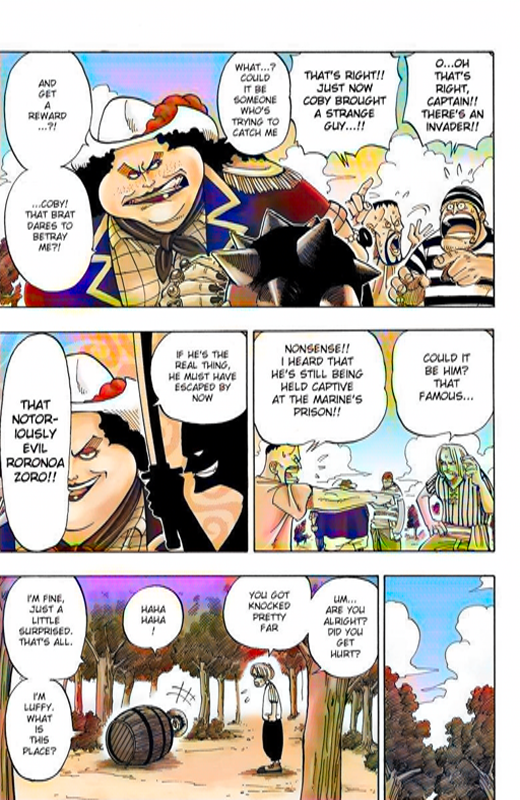

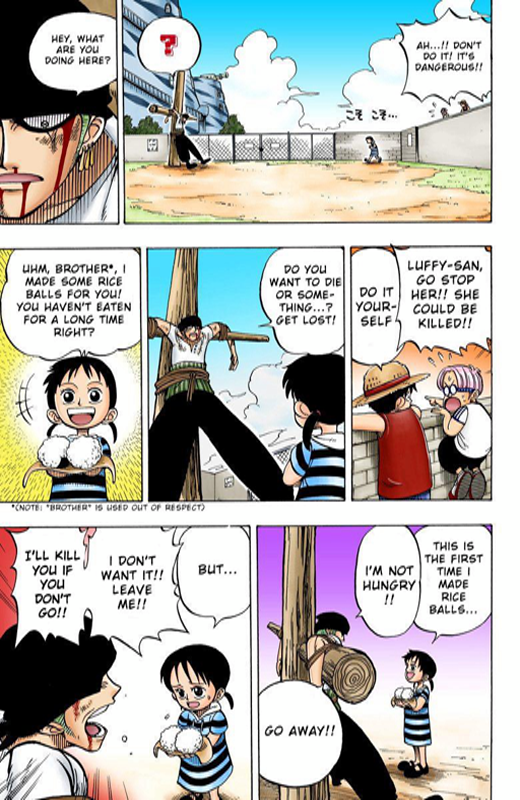

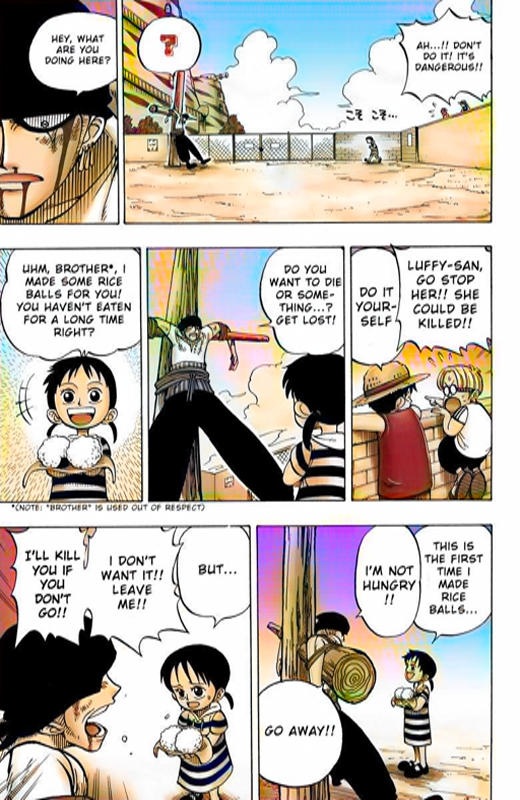

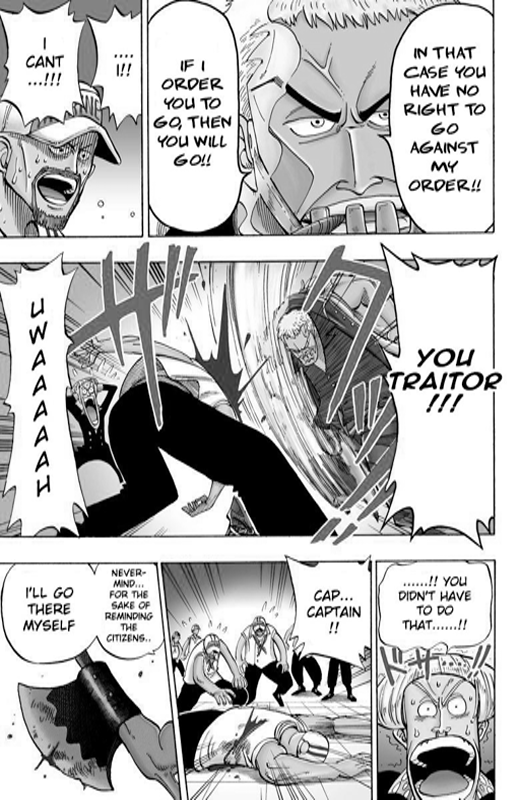

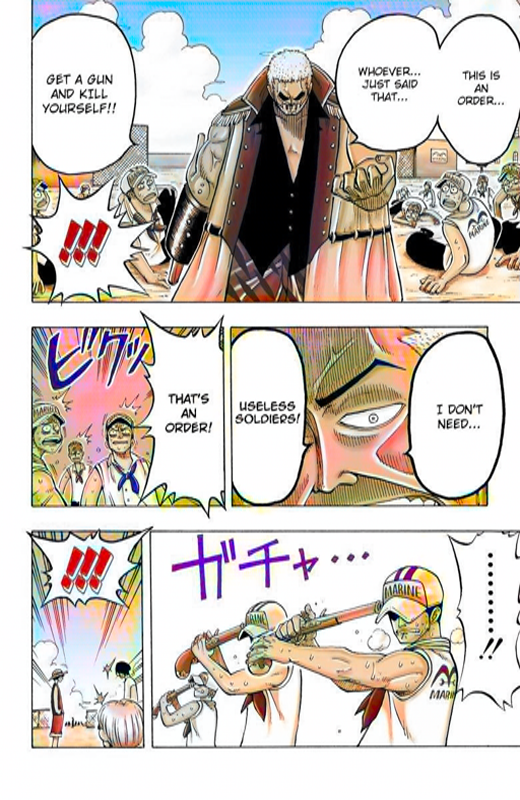

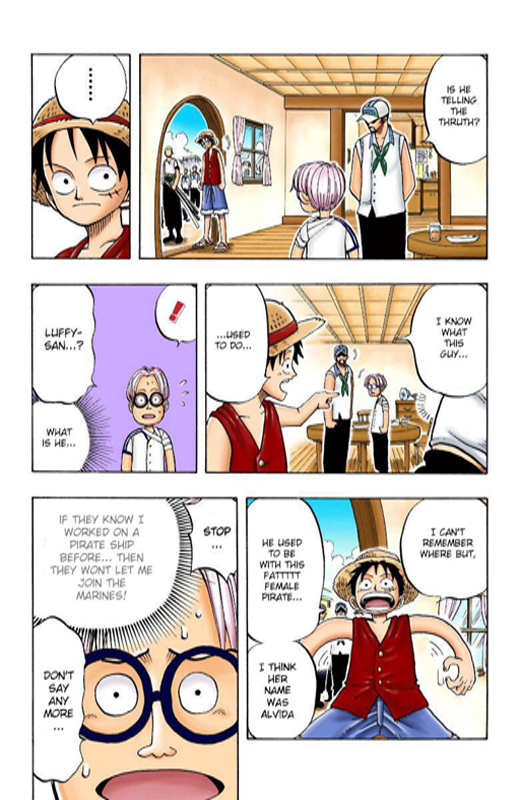

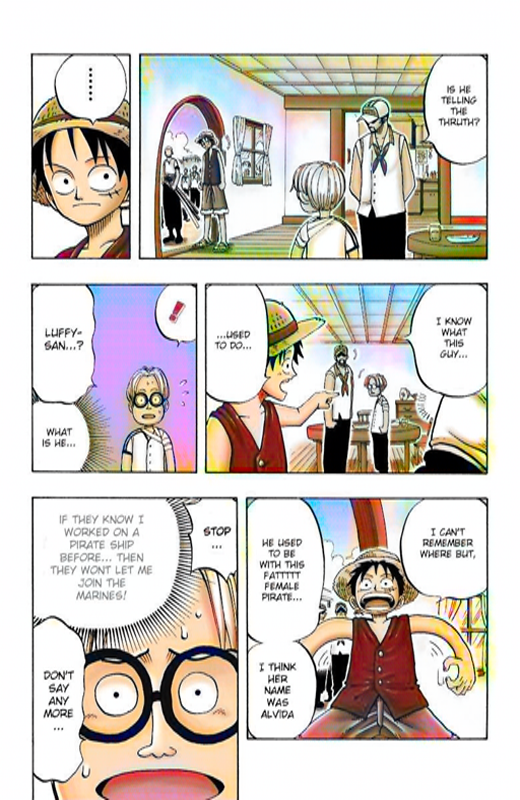

As a manga reader, I enjoy reading colorized manga more than gray-scaled ones. However, colorizing takes manga creators a lot of additional effort. What if we had an auto-painter that converts b&w manga into colorized ones? In this project, I developed a manga colorization solution based on the pix2pix model. It can colorize b&w manga pages after being trained on a set of colorized sample pages. Future work will focus on correcting inaccurate results and making the colorization look more natural.
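For reference, the standard pix2pix objective combines a conditional GAN loss with an L1 reconstruction term. Here $x$ would be a b&w page, $y$ its colorized counterpart, $G$ the generator and $D$ the discriminator; the generator's noise input is omitted. $\lambda$ is a hyperparameter (the public pix2pix PyTorch code defaults it to 100); whether this project changed it is not stated.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x}\big[\log\big(1 - D(x, G(x))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_1\big] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G)
\end{aligned}
```

The L1 term pushes the output toward the reference colors, while the GAN term discourages the blurry, desaturated averages that L1 alone tends to produce.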

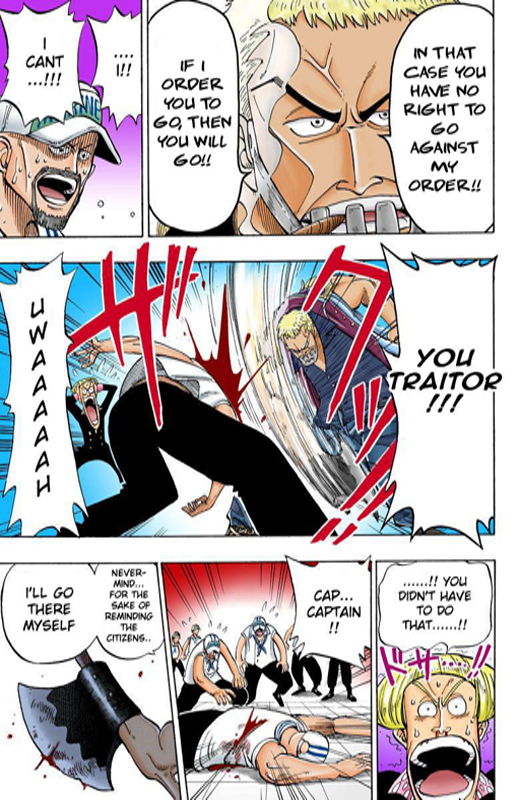

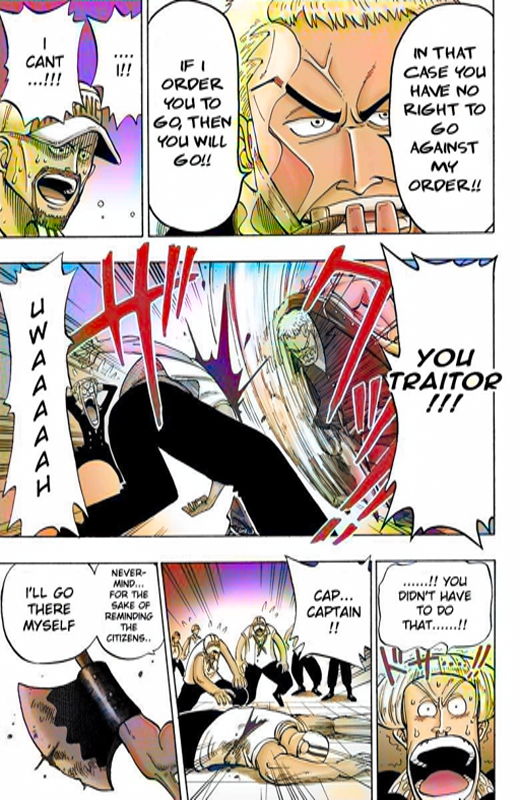

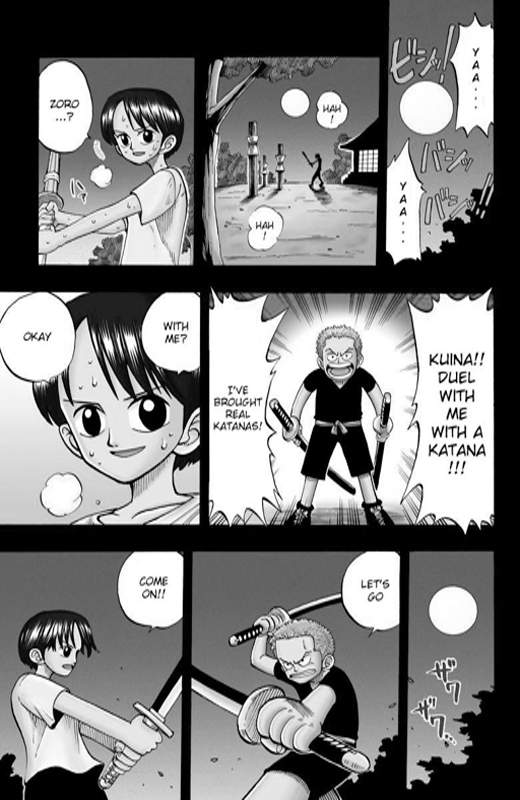

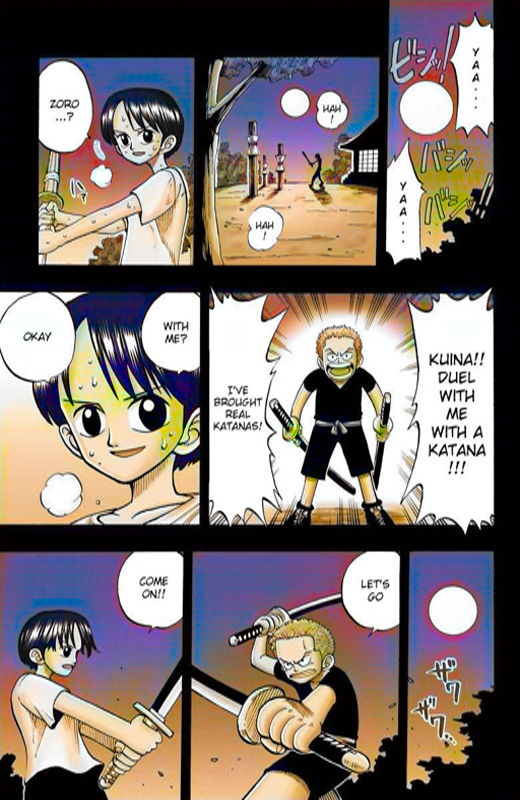

Results

The model was trained on ~150 One Piece manga pages; results should improve with more training data.

[Example results: image table not preserved. Columns: real_A | real_B | fake_B]
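The column names match the convention of the public pix2pix PyTorch code, which the train.py/test.py flags in the Code section resemble. This is an assumption, since the project's own code is not shown here. In that code, each training image holds an A half and a B half side by side, and --direction decides which half is fed to the generator: the input is always called real_A, the target real_B, and the generator's output fake_B. The hypothetical helper below sketches that split on a NumPy array.

```python
import numpy as np


def split_ab(ab: np.ndarray, direction: str = "BtoA"):
    """Split a side-by-side {A|B} image into (real_A, real_B).

    Hypothetical helper: the left half is A and the right half is B.
    With "AtoB" the A half is the generator input (real_A); with "BtoA"
    the halves swap roles, so the B half becomes real_A.
    """
    if direction not in ("AtoB", "BtoA"):
        raise ValueError(f"unknown direction: {direction}")
    width = ab.shape[1]
    half = width // 2
    # Take two equal-width halves (a trailing odd column is dropped).
    a, b = ab[:, :half], ab[:, half:2 * half]
    return (a, b) if direction == "AtoB" else (b, a)


# Toy 2x4 "image": left half all ones (A), right half all zeros (B).
ab = np.concatenate([np.ones((2, 2)), np.zeros((2, 2))], axis=1)
real_A, real_B = split_ab(ab, "BtoA")
print(real_A.sum(), real_B.sum())  # 0.0 4.0
```

With BtoA, real_A in the results table would therefore be the B half of each training pair.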

Model/Data

- github link

- Takes ~10 min to train the model.

- Training data is included in datasets/onepiece/A/.
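This section does not say how the b&w/color pairs were produced. One possible way to build paired data is to derive the b&w half from each colorized page. The sketch below assumes that approach and uses a plain luminance conversion (ITU-R BT.601 weights) as a stand-in for real manga inking and screentones. It places the color page as A and the grayscale page as B, so that --direction BtoA would feed the grayscale half to the generator. Both function names are hypothetical.

```python
import numpy as np


def to_gray_rgb(color: np.ndarray) -> np.ndarray:
    """Approximate a b&w page from an RGB uint8 page, kept as 3 channels."""
    weights = np.array([0.299, 0.587, 0.114])  # BT.601 luma weights
    luma = color[..., :3].astype(np.float64) @ weights
    gray = np.clip(np.rint(luma), 0, 255).astype(np.uint8)
    return np.repeat(gray[..., None], 3, axis=-1)


def make_pair(color: np.ndarray) -> np.ndarray:
    """Concatenate [A = color | B = gray] side by side (hypothetical layout)."""
    return np.concatenate([color, to_gray_rgb(color)], axis=1)


page = np.zeros((4, 3, 3), dtype=np.uint8)
page[..., 0] = 255  # a pure-red toy "page"
pair = make_pair(page)
print(pair.shape)  # (4, 6, 3)
print(pair[0, 3])  # [76 76 76]
```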

Code

- manga_colorization.ipynb: run this notebook to regenerate the results shown above.

For other use cases:

train.py:

python train.py --dataroot path/to/datasets --name GIVE_A_NAME --model pix2pix --direction BtoA

test.py:

python test.py --dataroot same/path/as/above --name SAME_NAME_AS_ABOVE --model pix2pix --direction BtoA

Technical Notes

Tested on Datahub.
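The train.py and test.py commands in the Code section only work together when they share --dataroot, --name and --direction. In the public pix2pix PyTorch code, --name selects the checkpoints/<name>/ folder that training writes to and testing loads from. A small hypothetical helper that builds both argument lists from one set of values keeps them in sync; the dataroot and name below are placeholders.

```python
import shlex


def pix2pix_commands(dataroot: str, name: str, direction: str = "BtoA"):
    """Build matching train.py / test.py argument lists (hypothetical helper)."""
    shared = ["--dataroot", dataroot, "--name", name,
              "--model", "pix2pix", "--direction", direction]
    return ["python", "train.py", *shared], ["python", "test.py", *shared]


train_cmd, test_cmd = pix2pix_commands("path/to/datasets", "onepiece_colorization")
# Print a shell-ready version of the training command.
print(shlex.join(train_cmd))
```

From the repository root, each list could then be run with subprocess.run(train_cmd, check=True), followed by the same call on test_cmd.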